
Can we listen to your thoughts in a few years?

By Jeroen Nas

In recent weeks, big names have made interesting statements. Elon Musk announced a company called ‘Neuralink’. It is supposed to free us from the limited-capacity interface our brains have with the outside world. Today, human output is limited to speaking or writing, which delivers only so many words per minute, while we are capable of thinking much faster than that. Neuralink, supposedly, will enable our brains to output data much faster, creating the next significant jump in human productivity. In other words, it will allow us to produce more ‘kitten posts’ and likes. We will have to see. The key point of this development is that brains become machine readable. The implications of that go beyond Neuralink’s current mission. It would enable more possibilities than ‘just’ productive output… at least in theory.

Mark Zuckerberg was a bit more practical: Facebook plans to read thoughts within a few years to literally speed up your writing, indeed to create more ‘kitten posts’ or other useful ‘products of the mind’. This plan, too, contains a strong element of making our brains machine readable.

So… will it be possible to read your brain? And what are the commercial and moral implications?

Interesting questions. Although both initiatives come with snappy explanations of how this will be possible, after a few days of reflection it seems unlikely to really happen within a few years. Still, as Amara’s law states: we tend to overestimate the effect of a technology in the short run and underestimate it in the long run. It could be the same in this case.

Jason Hreha, the up-and-coming behavioral scientist who recently started publishing in daily hype mode, doesn’t think so. In his article about Facebook’s attempt to start reading thoughts, ‘Don’t worry, Facebook won’t be able to listen in on your thoughts’, he explains why this is not possible with current technologies. He evaluates options from ‘implanted electrodes’ to the cooler and less invasive sounding ‘Near Infrared Spectroscopy’. Without scary and very long operations, it won’t happen, he concludes. Although people engage in scarier things while showing off on social media (like selfies in life-threatening situations), I do expect that voluntarily throwing yourself onto the surgeon’s table for faster typing will indeed only reach a limited audience.

But… suppose Hreha has missed a magical and mysterious technology that Mark and Elon have brewed in one of their labs. Imagine that before next Christmas, not only Santa will be able to read what’s on your wishlist, but Facebook will be as well. It could start bombarding you with ‘relevant’ ads… and so on. What would be the implications of such a breakthrough?

Apart from technicalities such as ‘how close you have to get to someone’s brain to actually read it clearly’, it opens up a world of possibilities. In the amazing series ‘Black Mirror’ (season 1, episode 3: ‘The Entire History of You’), you can experience what the effect might be on our personal lives. Only saints might survive.

Commercially, it would mean that companies (read: Facebook) get access to your desires, needs and dislikes. This is definitely the holy grail for advertisers, conversion optimizers and loyalty or persuasion marketers. Or so you would think. No more customer profiling and educated guesses about which micro-segment you belong to; they would just read your brain and know what can be sold to you, which arguments will resonate with you and how much you are actually willing to pay for the product.

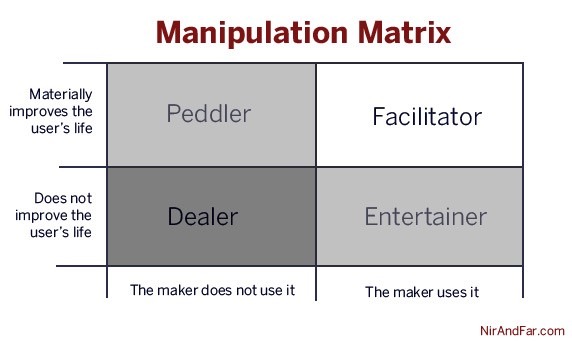

Hmm, it does sound like something might be wrong in that Marketing Nirvana, doesn’t it? Nir Eyal tackled that issue in his excellent article ‘The Morality of Manipulation’, published today on Medium. Even if reading our brains were possible, you could consider not doing it (or making it a criminal offense) because it could lead to ‘black hat’ manipulation. As Eyal describes, manipulation is not always wrong. Weight Watchers is an example. That’s a form of voluntary submission to being manipulated into desired behavior, leading to great results. Well… often. My personal trainer thrives on that principle, and she should.

Eyal comes up with a simple tool to tell whether it’s wrong or not: the manipulation matrix. It boils down to the principle that if the maker of a product has (ever) used it him/herself with good intentions and believes that it contributes to the quality of life, then, even if the product is addictive and/or has aspects of manipulation, it is not a ‘bad’ manipulative product (or service).

Well, I’ll let that thought sink in for a few days. For now, I just hope that Zuck and Musk do not believe too much in what they are doing; otherwise they might get away with it. Morally.