Doing experiments with Sinan Aral

For some, “doing experiments” sounds abstract (or even a bit weird), but when you’re in marketing (or any other behavioral science, for that matter), experiments should be the basis for many of your decisions. Testing scenarios, hypotheses and strategies in a scientific way gives companies a data-driven decision-making process in which guesswork and gut feeling are no longer the main drivers of marketing decisions.

One of the “gurumeisters” of scientific experimentation and social influence is Sinan Aral. This young and successful MIT professor visited Amsterdam this week, invited by the equally inspiring Arjan Haring from The Control Group, and showed a select group of individuals how the real deal is done, based on a case provided by Ahold Delhaize, the Dutch supermarket giant.

VEMT was happy to be part of this experiment, and we’d like to pass on what we learned so you can profit from it as well.

VEMT’s Learnings

Creativity kills experimentation quality

OK, this is a provocative, counterintuitive headline meant to get your attention, but we’ll show that it holds in this context. Applying data science, experimentation and marketing to a real-life case brings out amazing creativity in people. It is more than impressive to see how a group of strangers immediately unite and get fired up to deliver once they are assigned to a group and given a goal: it pushes performance. This shows most clearly in how the group launches ideas. Lacking an agreed process and a leader, each individual starts by proposing improvement ideas. What if Ahold did this? What if we could…? We learned that this is exactly where part of the challenge lies: all participants, without exception, started generating improvement ideas for the case rather than ideas about how to test elements of the case for improvement. This tiny difference in wording is an essential difference in approach, and it is where we see our real-life clients get stuck as well. Obviously, it is much more rewarding for humans to generate new ideas than to test which idea is best. Availability bias aside.

Your best experiments will be killed

Another counterintuitive statement, but a less provocative one. Most data scientists and marketers will experience this in practice some day. When you have an experiment running that shows clearly beneficial results for at least one of the test scenarios, it is costly to keep the worst-performing scenarios running while opportunity costs accumulate, so the pressure to stop the test early is strong. The present Chief Data Scientist of Booking.com referred to a study from Google (we couldn’t find it yet) in which they claim that some tests actually score quite differently when they run for a longer term. This might be a reason to stick to your test setup and demand a longer running period… of course only if you are able to minimize the exogenous effects on the test for that longer period.
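
To make the risk of stopping early concrete, here is a minimal Python sketch. It assumes a purely hypothetical "novelty effect": the variant starts with a strong lift that fades over time and settles slightly below the control. The function names and all numbers (`initial_lift`, `half_life_days`, `long_term_lift`) are illustrative assumptions, not figures from the session or from the Google study mentioned above.

```python
def daily_lift(day, initial_lift=0.30, half_life_days=7, long_term_lift=-0.05):
    """Hypothetical relative lift of the variant over the control on a given day.

    Assumption: the lift starts at `initial_lift` and decays exponentially
    towards `long_term_lift`, halving the gap every `half_life_days` days.
    """
    decay = 0.5 ** (day / half_life_days)
    return long_term_lift + (initial_lift - long_term_lift) * decay


def average_lift(days):
    """Average lift you would report if you stopped the test after `days` days."""
    return sum(daily_lift(day) for day in range(days)) / days


# Compare the verdict after one week with the verdict after three months.
short_run = average_lift(7)
long_run = average_lift(90)
print(f"stopped after 7 days:  {short_run:+.1%}")
print(f"stopped after 90 days: {long_run:+.1%}")
```

Under these assumed numbers the variant looks like a clear winner after one week, while the three-month average turns slightly negative. Real tests will behave differently; the point is only that the stopping moment can change the conclusion.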

Scale delivers extra insights

Sinan showed some slides with insights into the very large-scale experiments he has been running with large internet companies like Facebook. One of the interesting insights he demonstrated was that if you run a test in which the average result of the two scenarios is not significantly different, it doesn’t always mean the outcomes are the same.

If you have enough participants in the experiment to dive deep (and with statistical significance) into the spread of the outcome (see picture), you can see that, although the average is not very different between the two scenarios, the extremes show a big difference. Donald Trump, for example, has this effect on people’s voting behavior, but you can also observe it in business or health cases.

To use this type of insight, your test data clearly needs to be voluminous, as each individual segment needs to be large enough to be distinguished from the others with significance.
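
The idea of "same average, different extremes" can be illustrated with a small Python simulation. This is a sketch on made-up data, not a reconstruction of Sinan's experiments: it assumes a variant that pushes half of the participants up and the other half down by the same amount, so the effects cancel out on average. The helper names `summarize` and `simulate` and all parameters are hypothetical.

```python
import random
import statistics


def summarize(values):
    """Return the mean, the standard deviation and the 5th/95th percentiles."""
    cuts = statistics.quantiles(values, n=20)  # 19 cut points: 5%, 10%, ..., 95%
    return {
        "mean": statistics.fmean(values),
        "stdev": statistics.stdev(values),
        "p05": cuts[0],
        "p95": cuts[-1],
    }


def simulate(n=20000, seed=42):
    """Simulate a control and a polarizing variant (all numbers are assumptions)."""
    rng = random.Random(seed)
    # Control: everyone responds in roughly the same way.
    control = [rng.gauss(100, 10) for _ in range(n)]
    # Variant: each participant is randomly pushed up or down by 25,
    # so the positive and negative reactions cancel out in the average.
    variant = [rng.gauss(100, 10) + rng.choice((-25, 25)) for _ in range(n)]
    return summarize(control), summarize(variant)


control, variant = simulate()
print("control:", control)
print("variant:", variant)
```

Comparing only the two `mean` values suggests the variant did nothing, while `stdev`, `p05` and `p95` show that the tails moved a lot. With a small `n` the percentile estimates become noisy, which is why this kind of analysis needs scale.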

Marriage locks you out (or in)

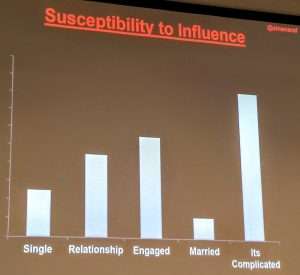

In the early evening, Sinan presented some more interesting examples of social influence tests he has run over the years. A nice one to quote at your next cocktail party is the research he did with Facebook on the susceptibility of individuals, split out by relationship status (as reported by the individuals themselves). As you can see in the results overview, social influence gets more of a grip on you as you move deeper into a relationship… until the moment your significant other enters into a legal relationship with you. Interpreting the causality of this result makes for a nice discussion over cocktails. Just make sure you know where your significant other is when you make your loud statements after the third cocktail or so.

Because then your next move could be into the ‘It’s complicated’ status, and that seems to be a guarantee for losing your firmness and straight back: you will lean towards just about any opinion out there!

How can you use these insights for loyalty programs?

In the spirit of true social experimentation: try things before you make decisions based on assumptions. Do not use the above insights as ‘rules of thumb’ or ‘proof for your next proposal’. Instead, use the framework and examples to design some great tests, run them long enough to see the longer-term impact, and run them at scale. We’ll be right there to help you set them up and turn the results into your next great feature or campaign.